- Don't try to do both sides.

Ravi Mohan's Blog

Thursday, December 07, 2006

Shining Darkly

My friend Amit Rathore wrote an excellent article on transitioning from a developer to a manager.

Richard Hamming's famous speech has excellent advice on the (similar) manager vs scientist decision that many researchers struggle with.

" ...Question: Would you compare research and management?

Hamming: If you want to be a great researcher, you won't make it being president of the company. If you want to be president of the company, that's another thing. I'm not against being president of the company. I just don't want to be. I think Ian Ross does a good job as President of Bell Labs. I'm not against it; but you have to be clear on what you want. Furthermore, when you're young, you may have picked wanting to be a great scientist, but as you live longer, you may change your mind.

For instance, I went to my boss, Bode, one day and said, ``Why did you ever become department head? Why didn't you just be a good scientist?'' He said, ``Hamming, I had a vision of what mathematics should be in Bell Laboratories. And I saw if that vision was going to be realized, I had to make it happen; I had to be department head.''

When your vision of what you want to do is what you can do single-handedly, then you should pursue it. The day your vision, what you think needs to be done, is bigger than what you can do single-handedly, then you have to move toward management. And the bigger the vision is, the farther in management you have to go. If you have a vision of what the whole laboratory should be, or the whole Bell System, you have to get there to make it happen. You can't make it happen from the bottom very easily. It depends upon what goals and what desires you have. And as they change in life, you have to be prepared to change.

I chose to avoid management because I preferred to do what I could do single-handedly. But that's the choice that I made, and it is biased. Each person is entitled to their choice. Keep an open mind. But when you do choose a path, for heaven's sake be aware of what you have done and the choice you have made. ..." You pays your money and you takes your choice.

Even worse is the developer who really likes being a developer and is or can be very good at it, but chooses to become a mediocre, unhappy manager because "that is the only way to make more money". These people deserve pity, but no sympathy.

Tuesday, November 14, 2006

Thinking as a learnable skill

If I remember correctly, it was in one of Edward de Bono's books that I first encountered the idea that thinking might actually be a skill, and thus learnable (and improvable by practice).

Over the years, this belief has been reinforced and I was forced to pull it all together yesterday when a friend asked me "How can I improve the quality of my thinking"?

This is my answer, fwiw.

First learn critical thinking. Critical thinking teaches you to evaluate an argument and separate chaff from grain. Suppose someone were to state, for example, "Agile is cool and we'd like to adopt it in the next project". How do you react? To learn critical thinking one could go through a course in logic, learn all the different logical fallacies and then try to apply them to real life conversations and claims. But Critical Thinking - An Introduction offers a gentler path. Just make sure to skip most of Chapter 1. Just read Sections 11.4, 1.2 and 1.3 and move on to Chapter 2.

I've never seen a better book that teaches one how to cut through the layers of BS and get to the core of an argument. The Craft Of Argument is a good book as well, though oriented more towards writing good papers and articles.

Second, learn Lateral Thinking. Dr. de Bono, the inventor of the method, has written many, many useless books and a couple of brilliant ones. The latter contain some powerful ideas.

Dr. de Bono's central contribution is the notion of a "thinking tool", analogous to tools like a hammer or a screwdriver, each tool having a context of use and a particular effect. The books to get are Lateral Thinking For Management (non-management folks can ignore the "for management"; it just means that he uses management situations to illustrate various thinking tools, and nothing prevents you from using them in your own domains) and Serious Creativity. Both are chock full of systematic, practical lessons about how to think in different situations.

Third, I'd suggest a brilliant thinking tool - Thinking Gray - from A Contrarian's Guide To Leadership by Steven Sample. The book deals with a lot more than thinking, and in addition is very hard to find (Thank You Learning Journey, for getting me a copy) and so I'll briefly explain "Thinking Gray".

In essence, Thinking Gray (TG from now on) asks you not to make a decision on something, or choose a side in an argument, till you absolutely have to. Sounds simple, doesn't it? However, the human mind has a tendency to converge to a decision, classify data into binary categories (good/bad, black/white, friend/foe) and take sides in an argument. For example, everyone has an opinion about (say) the American invasion of Iraq. Some may think it a "good" thing and others may decide that the war is a "bad" idea. The TG practitioner, while listening to all the data, refuses to take a binary stand till it becomes absolutely imperative that he does so.

This refusal to decide lets you avoid three dangerous tendencies - (1) the tendency to filter incoming data to support a pre decided conclusion (2) the tendency to vacillate between two conclusions, depending on who you last spoke to (and how persuasive he was) and (3) most dangerous, the tendency to slant your beliefs towards what you think people around you believe.

Steven Sample precisely distinguishes TG from skepticism. The skeptic has two mental "buckets" - one labelled "I believe" and another labelled "I don't believe". The skeptic's policy is that nothing goes into the "I believe" bucket without proof or a logical, convincing argument. Everything, by default, goes into the "I don't believe" bucket and stays there till logic or proof moves it into the "I believe" bucket. The TG practitioner, on the other hand, has no "buckets".

One thing the TG practitioner has to be careful of is that other people may get confused about his "true" beliefs and intentions, and may think that he agrees with what they say because he seems to understand them and asks clarifying questions.

Thinking Gray can be enhanced by projecting the multiple conclusions forward into the future (thus providing a rich tapestry of possibilities) and backward into the past (allowing multiple interpretations). Combined with Paul Graham's "running upstairs" or "staying upwind" concept, TG allows you to navigate an uncertain future in a stress free fashion and maximize productivity in the present. More on this later.

To generate ideas, in other words, to be creative, de Bono's thinking tools work very well. I recently came across an approach called TRIZ. The basic idea seems to be worth investigating, but during 2003/2004, TRIZ (and the associated concept ARIZ) became the fad of the day, just as "agile" and "lean" seem to be today's. Thus there is a lot of nonsense written about them, as various authors brought out books and a lot of consultants were selling "ARIZ enablement" and hiring themselves out as "ARIZ coaches". As a result, there is a lot of worthless literature out there and some effort has to be expended to dig out the core of ARIZ and TRIZ. As soon as I get some free time (a precious commodity these days), I will look into this.

To conclude, all these methods treat thinking as a learnable skill and the one problem with this model is that to get good at thinking, as with finding the route to Carnegie Hall, practice is the only way. And practicing thinking is an odd concept for a lot of people.

Sunday, November 12, 2006

Karma Capitalism?

Sometimes, I toy with the idea of getting an Ivy League MBA. And then I come across something like this (via Evolving Excellence).

...For the members of the Young Presidents’ Association, meeting in New Jersey, this was no ordinary leadership seminar. They were being imbued with the values of the Hindu philosophy of Vedanta, by its most venerable proponent, Swami Parthasarathy. ...

On the syllabus at Harvard, Kellogg, Wharton and Ross business and management schools is the Bhagavad Gita, one of Hinduism’s most sacred texts.

....

He began studying the Bhagavad Gita, and has spent the past 50 years building a multimillion pound empire through explaining its practical benefits to wealthy corporations and executives.

He has recently returned to India from America where — in addition to the Young Presidents’ Organisation — he lectured students at Wharton Business School and executives at Lehman Brothers in Manhattan. His tours are booked well beyond next year, and will include Australia, New Zealand, Singapore and Malaysia.

While traditional business teaching has used the language of war and conquest, Parthasarathy uses the Bhagavad Gita to urge his students to turn inwards, to develop what he calls the intellect, by which he means their own personal understanding of themselves and the world, and to develop their “concentration, consistency and co-operation”.

The technical folk do have their religious fads, but compared to such arrant nonsense, they are the very epitome of logic and reason.

If the Ivy League schools and Lehman Brothers and other corporations believe this nonsense enough to enrich "guru" (gag!!) Parthasarathy by many millions of pounds, why should I get an MBA? Hmmm, on the other hand, maybe that is precisely the reason why I should get one?

If anyone reading this blog has an Ivy League MBA and/or works on the Street, could you please explain how such rackets work? I can't make any sense out of this. Any help appreciated.

Friday, October 27, 2006

Cracking the GRE

Update: Analytical Score updated.

I wrote the GRE a few days ago.

Scores -

- quant- 790/800

- verbal 800/800 (heh!)

- Analytical Writing - 6.0/6.0 (originally "awaited"; I was not too worried about this)

Wednesday, October 04, 2006

Listening to Steve Yegge (and skirmishing with Obie)

Steve Yegge's blog post titled "Good Agile, Bad Agile" seems to have ignited one of those periodic feeding frenzies in the blogosphere. The comparison he makes between Agile and Scientology seems to have touched a nerve in many "agilists". The comments on Steve's post reveal a good deal of defensiveness, google envy and the occasional reasoned response.

Summarizing Steve's "anti agile" points (from a very long post), he says

- The Chrysler Project, which was the exemplar for agile projects, failed badly.

- Mandatory 100% pairing sucks bigtime.

- Agile isn't scientific. (at best, any proof for "improved productivity" is anecdotal).

- There are a lot of flaky "agilists" trying to be "coaches" and sell books and seminars.

- These "seminar agilists" create an artificially polarized world where any process that isn't Agile(TM) is "waterfall" or "cowboy".

- Agile is very date driven, ignoring the human variations in productivity.

- If anyone puts forward an alternative process, the "defenders" of agile use one of two responses to oppose it:

- "all the good stuff he described is really Agile"

- "all the bad stuff he described is the fault of the team's execution of the process"

And Oh, Steve, keep writing!

Sunday, September 17, 2006

Spreadsheets and Self Sabotage A Case Study

This is a true story. Names have been changed to protect the innocent (and the guilty).

Once upon a time, long long ago, in a faraway land, there was this company, a typical enterprisey company we'll call BankCo (in reality the company doesn't work in banking; the domain is similar but different, and I am just obfuscating things as explained above). BankCo was running all its "enterprise" operations off a set of Excel sheets and at some point decided to redo it "properly". The folks at BankCo were essentially businessmen, not programmers, so they decided to get some consultants at the cutting edge of technology. Enter AgileCo. AgileCo decided to rewrite the business logic as a 3 tiered OO app using all the latest blub technologies.

The result? The BHS project (in AgileCo most projects have three letter acronyms) has frustrated developers, grumbling clients and absolutely yucky code.

Now there were a lot of things the team did wrong. Fundamentally the project was doomed the moment the team was "volunteered" for such an aggressive schedule (committed to the client by the sales manager, "agile planning" notwithstanding), but the thinking might have been "hey, we just have a bunch of spreadsheets, how hard can it be to convert them into a 3 tiered Blub app?". The (non intuitive) correct answer is "potentially very very hard".

Spreadsheets are very subtle beasts. They just look very simple. There are these rows and columns of cells and even non programmers can quickly create useful "programs" so it should be fairly easy to translate a set of spreadsheets into OO programs right?

Wrong.

For one, a spreadsheet is essentially a functional program.[warning - pdf]

[Excerpt]

"It may seem odd to describe a spreadsheet as a programming language. Indeed, one of the great merits of spreadsheets is that users need not think of themselves as doing “programming”, let alone functional programming — rather, they simply “write formulae” or “build a model”. However, one can imagine printing the cells of a spreadsheet in textual form, like this:

A1 = 3

A2 = A1-32

A3 = A2 * 5/9

and then it plainly is a (functional) program. Thought of as a programming language, though, a spreadsheet is a very strange one. In particular, it is completely flat: there are no functions apart from the built-in ones(*). Instead, the entire program is a single flat collection of equations of the form “variable = formula”. Amazingly, end users nevertheless use spreadsheets to build extremely elaborate models, with many thousands of cells and formulae."

Simon goes on to state how he and his colleagues worked on removing the "procedural abstraction barrier" in the functional language embodied by a typical spreadsheet. (There are folks working on integrating more powerful functional languages and other paradigms into spreadsheets.)

The key point is that a spreadsheet is essentially functional. Besides, a spreadsheet propagates changes in values and formulae automatically, unlike imperative and OO languages (e.g., if the cell A1 is set to 5 instead of 3 in the above sample, A2 automatically becomes -27).

Besides value propagation, the 2 dimensional spatial relationships between spreadsheet cells make it unlike most programming interfaces in existence today.
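The propagation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Sheet` class and its `set`/`get` methods are invented for this example and are not how any real spreadsheet is implemented. Each cell holds a formula, and reading a cell pulls fresh values from the cells it depends on.

```python
# Hypothetical mini "spreadsheet": each cell is a formula over other cells.
# Cell names follow the excerpt above; the Sheet class itself is illustrative.

class Sheet:
    def __init__(self):
        self.formulas = {}  # cell name -> function taking the sheet

    def set(self, name, formula):
        """Store a constant or a formula (a callable taking the sheet)."""
        if callable(formula):
            self.formulas[name] = formula
        else:
            # Wrap constants so every cell is evaluated the same way.
            self.formulas[name] = lambda _sheet, value=formula: value

    def get(self, name):
        """Pull model: recompute the cell from its dependencies on every read."""
        return self.formulas[name](self)


sheet = Sheet()
sheet.set("A1", 3)
sheet.set("A2", lambda s: s.get("A1") - 32)
sheet.set("A3", lambda s: s.get("A2") * 5 / 9)

print(sheet.get("A2"))  # -29
sheet.set("A1", 5)      # change one input...
print(sheet.get("A2"))  # -27: the dependent cell follows automatically
```

Nobody tells A2 or A3 to update. The dependency structure lives in the formulas themselves, which is the property a translation to another paradigm has to preserve by other means.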

" One of the most successful end-user programming techniques ever invented, the spreadsheet, uses what is essentially a functional model of programming. Each cell in a spreadsheet contains a functional expression that specifies the value for the cell, based on values in other cells. There are no imperative instructions, at least in the basic, original spreadsheet model. In a sense each spreadsheet cell pulls in the outside values it needs to compute its own value, as opposed to imperative systems where a central agent pushes values into cells. In some sense each cell may be thought of as an agent that monitors its depended-upon cells and updates itself when it needs to. As Nardi (1993)(Nardi 1993) points out, the control constructs of imperative languages are one of the most difficult things for users to grasp. Spreadsheets eliminate this barrier to end-user programming by dispensing with the need for control constructs, replacing them with functional constructs. "


Thus, when you try to convert the functionality of a complex set of spreadsheets to a blub-dot-net program, you are, in essence, translating from a functional language with value propagation to a very different paradigm (OO in this case) and also adding things like persistence and multi user interaction which a normal spreadsheet does not have to deal with. This project needs competent developers who have enough resources (including time) to do a proper job, and are expert in both functional and object oriented programming, with a keen awareness of the chasm between the two paradigms and a knowledge of how to bridge it. You wouldn't have much use for a German to English translator who didn't know any German, would you?
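To make the chasm concrete, here is a deliberately naive OO translation of the three-cell example quoted earlier (A1 = 3, A2 = A1 - 32, A3 = A2 * 5/9). It is a hypothetical sketch, not the BHS code: the class name `TemperatureModel` and the `recalculate` method are invented for illustration.

```python
# Hypothetical, deliberately naive OO translation of the three-cell example.
# Class and method names are invented for illustration.

class TemperatureModel:
    def __init__(self, a1):
        self.a1 = a1
        self.recalculate()

    def recalculate(self):
        # The dependency order that the spreadsheet tracked implicitly
        # must now be written out, and kept correct, by hand.
        self.a2 = self.a1 - 32
        self.a3 = self.a2 * 5 / 9


model = TemperatureModel(3)
model.a1 = 5             # plain assignment: nothing propagates
stale_a2 = model.a2      # still -29, computed from the old input
model.recalculate()      # the caller has to remember this step
fresh_a2 = model.a2      # now -27
```

With three cells this is trivial to get right. With many thousands of interdependent formulae, every forgotten or misordered recalculation is a potential bug, before persistence and multi user access are even considered.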

Besides this technical misstep there are a host of others that make AgileCo's BHS project an interesting case study.

The first is the premature time commitment to clients, bypassing the essence of AgileCo's favorite planning methodology. This is the killer mistake. The second is an arbitrary team structure with unclear roles and shaky team buy in. The third factor worth discussing is how the dominant methodology at AgileCo discourages deep thought by developers and encourages them to grab a "User Story" and hit the keyboard as soon as possible. This all but eliminates the chance of finding a technical approach that attacks the core of the problem. The fourth is how a totally unnecessary onshore/offshore team split adds dysfunctional management, unnecessary paperwork and communication overhead. The fifth and most crucial insight is about how dumb management can totally screw up a project's chances of success beyond repair.

Postscript:- The team is now on deathmarch mode and works on Saturdays and some Sundays. Of course the managers don't work on weekends. The brighter developers are looking for other jobs.

(*) Most spreadsheets have some kind of extension mechanism. In Excel, for example, you can use VBA to write your own extensions. But this means (a) an end user has to learn a programming language and (b) subtle errors can creep in when you mix imperative and functional code. Debugging spreadsheets is a nightmare at the best of times.

Saturday, September 09, 2006

Rules For Failure #1 Create a group

I've been noticing that people who never get anything done seem to follow a few (anti-)patterns when they start off their projects. The most common one says "To get something done, first create a committee".

The key insight is that people may not think they are creating a committee; they may think that they are "discussing" their ideas with friends. But what happens is that there is a lot of discussion and no action. If there is some action, it tapers off soon enough.

This can be seen in various attempts to "learn design patterns" or "evangelize agile" or whatever. The dynamics of forming a group give an initial emotional high and a short lived illusion of progress. In the end, the effort dies down and it is time for the next fad.

With all the emphasis on "teamwork", not enough people can summon up the gumption to start working on something by themselves and make some progress before talking about it, or trying to get other people involved.

Consciously reversing this pattern seems to help. To master a programming language or framework, for example, just shut up and code. When the coding is finished, release the code and do something else. Do that long enough and you'll find you attract like minded people.

So these days, when people say things like "I am very interested in learning mathematics" my counter question is "so how many problems/proofs did you work through today"? Nothing else is important. Replace "working through problems/proofs" with the appropriate context dependent measures of progress.

Don't yap. Work.

Thursday, August 03, 2006

W[ear] Cloak Of Invisibility

The compiler project has kicked off and I have zero free time. I am spending all my time coding and reading. So .... I am totally unavailable.

I am off the net and the phones are off. There is no way you can reach me. I am travelling extensively as well (long story). Mail will (eventually) be answered.

End of bulletin.

Saturday, July 29, 2006

Indo Pak War - A Slightly Different Perspective

But Martin, Enterprise Software IS Boring

Martin Fowler writes about Customer Affinity, a factor he believes distinguishes a good enterprise developer from a bad one. So far, so good.

He says "I've often heard it said that enterprise software is boring, just shuffling data around, that people of talent will do "real" software that requires fancy algorithms, hardware hacks, or plenty of math."

This is almost exactly what I say. (Just remove the quotes around "real" ;-) ).

Martin goes on to disagree with this idea (which is fine) but then he says "I feel that this usually happens due to a lack of customer affinity." And this is where I disagree.

People who work on things like compilers and hardware hacks and "tough" algorithmic problems can have customers, just as the enterprise folks do. I know I have. The compiler I am writing now has a customer. The massively parallel neural network classifier framework I wrote a couple of years ago had a customer. The telecom fraud detection classifier cluster I worked on last year (along with some other talented programmers) is being used by a company which is very business (and customer) oriented. So the idea that a "customer" (and thus customer affinity) is restricted only to those folks who write database backed web apps is simply not true.

Math/algorithms/hardware hacks and the presence or absence of a "customer" (and thus, customer affinity) are orthogonal (you know, two axes at 90 degrees to each other) issues.

With the customer issue out of the way, let us examine the core issue - the notion that talented developers would prefer to do stuff like compilers and algorithms instead of a business app (by which I mean something like automating the loan disbursal processes of a bank).

The best way to get a sense of the truth is to examine the (desired) flow of people in both directions. I know dozens of people who are very very good at writing business software who yearn wistfully for a job doing "plumbing" like compilers and tcp/ip stacks, but I've never yet seen someone who is very very good at writing a compiler or operating system (and can make money doing so) desperately trying to get back to the world of banking software. A programmer might code enterprise apps for money, but at night, at home, he'll still hack on a compiler.

It's kind of a "Berlin Wall" effect. In the days of the cold war, to cut through the propaganda of whether Communism was better than Capitalism, all you had to do was to observe in which direction people were trying to breach the Berlin Wall. Hundreds of people risked their lives to cross from the East to the West but practically no one went the other way. Of course this could just mean that the folks didn't appreciate the virtues of people's power and the harangues of the commissars, but I somehow doubt it.

To see whether living in India is better than living in Bangladesh (or whether living in the United States is better than living in India), look at who is trying to cross the barriers and in which direction.

Economic Theory suggests another way of finding an answer. Suppose you were offered, say, $10,000 a month for 2 years to develop the best business app you can imagine and, say, $8,000 a month for the same two years to work on the best systems/research project you can imagine. Which one would you choose? Now invert the payment for each type of work. Change the amounts till you can't decide one way or another and your preference for either job is equal. Finding this point of indifference (actually it is an "indifference curve") will teach you about your preference for "enterprise over systems". I believe that while individuals will choose many possible points as their "points of indifference" on that curve, a majority of the most talented developers would prefer a lower pay for a good systems/research project in preference to an enterprise project, no matter how interesting. And to most developers, an enterprise project becomes interesting to the degree it needs "tough programming" skills (massively scalable databases, say; there is a good reason Amazon and Google emphasize algorithms, maths and other "plumbing" skills in their interviews and an equally good reason why a Wipro or a TCS doesn't bother testing for these skills).

The widespread (though not universal) preference of the very best software developers for "plumbing" over "enterprise" holds even inside Thoughtworks (where Martin works and where I once worked). I know dozens of Thoughtworkers who'd prefer to work in "core tech". While I was working for TW, the CEO, Roy Singham, periodically raised the idea of venturing into embedded and other non enterprise software and a large majority of developers (which invariably included most of the best devs) wanted this to happen. Sadly, this never actually came to pass.

A good number of Thoughtworkers do business software to put bread on the table, or while working towards being good enough to write "plumbing", but in their heart, they yearn to hack a kernel or program a robot. (Another group of people dream about starting their own web app companies.) For most of these folks tomorrow never quite arrives, but some of the most "businessy" developers in Thoughtworks dream of writing game engines one day or learning deep math voodoo or earning an MS or PhD. And good people have left TW for all these reasons. And if this is the situation at arguably the best enterprise app development company (at least in the "consultants" subspace), one can imagine the situation in "lesser" companies.

The programmer who has the ability to develop business software and "plumbing" software and chooses to do business app development is so rare as to be almost non existent. Of course, most business app dev types are in no condition to even contemplate writing "hard stuff" but that is a topic for another day.

Paul Graham (admittedly a biased source) says (emphasis mine),

"It's pretty easy to say what kinds of problems are not interesting: those where instead of solving a few big, clear, problems, you have to solve a lot of nasty little ones....Another is when you have to customize something for an individual client's complex and ill-defined needs. To hackers these kinds of projects are the death of a thousand cuts.

The distinguishing feature of nasty little problems is that you don't learn anything from them. Writing a compiler is interesting because it teaches you what a compiler is. But writing an interface to a buggy piece of software doesn't teach you anything, because the bugs are random. So it's not just fastidiousness that makes good hackers avoid nasty little problems. It's more a question of self-preservation. Working on nasty little problems makes you stupid. Good hackers avoid it for the same reason models avoid cheeseburgers."

Strangely enough, I heard Martin talk about the same concept (from a more positive view point, of course) when he last spoke in Bangalore. He explained how much of the complexity of business software is essentially arbitrary - how an arcane union regulation about paying overtime for one man going bear hunting on Thanksgiving can wreck the beauty of a software architecture. Martin said that this is what is fascinating about business software. But he and Paul essentially agree about business software being about arbitrariness. It is a strange sense of aesthetics that finds beauty in arbitrariness, but who am I to say it isn't valid?

Modulo these ideas, I agree with a lot of what Martin says in his blog post. The most significant sentence is

"The real intellectual challenge of business software is figuring out where what the real contribution of software can be to a business. You need both good technical and business knowledge to find that."

This is so true it needs repeating. And I have said this before. To write good banking software, for example, you need a deep knowledge of banking AND (say) the j2ee stack. Unfortunately the "projects and consultants" part of business software development doesn't quite work this way. I will go out on a limb here and say that software consulting companies *in general* (with the rare honorable exception, blah blah) have "business analysts" who are not quite good enough to be managers in the enterprises they consult for and "developers" who are not quite good enough to be "hackers" (in the Paul Graham sense).

Of course factoring in "outsourcing" makes the picture worse. By the time a typical business app project comes to India, most, if not all, of the vital decisions have been made and the project moves offshore only to take advantage of low cost programmers, no matter what the company propaganda says about "worldwide talent". So at least in India this kind of "figuring out" is almost non existent and is replaced by an endless grind churning out jsp pages or database tables or whatever. It is hard to figure out the contribution of your software to people and businesses located half a world away, no matter how many "distributed agilists" or "offshore business analysts" you throw into the equation.

This is true for non businessy software also, though to a much lesser degree. The kind of work outsourced to India is still the non essential "grind" type (though often way less boring than its "enterprise" equivalents). The core of Oracle's database software, for example, is not designed or implemented in India. Neither is the core of Yahoo's multiple software offerings - the maintenance is, development is not. And contrary to what many Indians say, this is NOT about the "greedy white man" exploiting the cheap brown skins. You need to do hard things and prove yourself before you are taken seriously. Otherwise people WILL see you as cheap drone workers. That's just the way of the world.

And I say this as someone who is deeply interested in business. 'Tis not that I loved business less but I loved programming more :-). I'd rather read code than a business magazine but I prefer a business magazine to, say, fashion news. I actually LIKE reading about new business models. I read every issue of The Harvard Business Review (thanks Mack) and have dozens of businessy books on my book shelf along with all the technical books. (I gave away all (300+ books) of my J2EE/dotNet/agile/enterprise dev type book collection, but that is a different story). I am working through books on finance and investing and logistics. I have friends who did their MBA in Corporate Strategy from Dartmouth or are studying in Harvard (or plan to do so). I have friends who work for McKinsey, and Bain and Co, and Booz and Allen, and Lehman Brothers. If I were 10 years younger (and thus had a few extra years to live), I'd probably do an MBA myself. And of course I have spent 10 years (too long, oh, too long) doing "enterprise" software. In my experience (which could turn out to be atypical), the "plumbing" software is way tougher and waaaaay more intellectually meaningful than enterprise software, even of the hardest kind. I might enjoy an MBA, but I will NEVER work in the outsourced business app development industry again even if I have to starve on the streets of Bangalore. I'll kill myself first. So, yeah I am prejudiced. :-). Take everything I say with appropriate dosages of salt.

And just so I am clear, I do NOT think Martin is deliberately obfuscating issues. I think Martin is the rare individual who actually chooses enterprise software over systems software and is lucky enough to consistently find himself working with the best minds and in a position to figure out the business impact of the code he writes. This is very different from the position of the average "coding body", especially when he is selected more for being cheap than because he knows anything useful ("worldwide talent", remember :-) ).

Btw, Martin does not use the word "plumbing" in a derogatory sense (though I've seen some pseudo "hackers" take it that way, especially since the word makes a not-so-occasional appearance in many of his speeches) but more in the sense of "infrastructure". If you are such a "hacker" offended by the use of the term, substitute "infrastructure" for "plumbing" in his article. That takes the blue collar imagery and sting out of that particular word, fwiw.

I think Martin has very many valuable things to say.

I just think he is slightly off base in this blog post and so I respectfully disagree with some of his conclusions.

But then again, it could all be me being crazy. Maybe enterprise software is really as fascinating as systems software, and writing a banking or leasing or insurance app really as interesting as writing a compiler or operating system (and unicorns exist, and pigs can fly).

Hmmmm. Maybe. Meanwhile I'm halfway under the wall and have to come up on the other side before dawn. Back to digging.

Wednesday, July 26, 2006

[Politics] Tip Of The Hat To Israel

I like to think I am "centrist" when it comes to politics and that the world has shades of grey and is not all black and white.

However, in the present Israel/Lebanon-Hezbollah conflict, I sympathize with Israel.

The key characteristic of a nation is that it has (or should have) a monopoly of violence within its borders. As far as I can make out, the Israeli withdrawal from Lebanon was conditional on the *state* of Lebanon reining in the Hezbollah.

If the Lebanese turn over a chunk of their territory to murdering fanatics trying to impose an archaic religious code on the rest of the world, they can't cry foul when people who lose patience with Allah's foot soldiers invade that territory. Either they control the territory, in which case they should be in control of their borders, not an Islamic militia. Alternatively they don't control it, in which case they have no business screaming about Israeli aggression or invasion.

The Israelis could, in an ideal world, have shown a more nuanced approach, but I guess their patience has its limits.

This blog entry says it best.

Saturday, July 22, 2006

Missing Comments Fixed

For some strange reason, some comments did not make it through to my mail account and were piling up in the blogger bin. Some of them were excellent (Mujib, S and some "anonymii") and have been approved, while a few (all anonymous :-D) along the lines of "I hope you burn in hell, Pagan Devil Worshipper", "U R suXorz" etc have been deleted.

The latter are particularly interesting. I can't imagine someone having a life so empty that they first read a blog they don't like and then laboriously compose a "hate comment" and post it with the full knowledge that it will be deleted with a single mouseclick. Oh well, it takes all kinds to make a world, I guess! I get a laugh out of these outbursts of venom, so please keep sending them.

Apologies to those whose comments were in limbo. As to the person who wanted the comments to "popup" rather than be listed in the permalink page, sorry about that, but *I* hate popups. Still, if enough readers want popups, I'll reconsider the decision.

That ends the administrivia announcement. Have a nice day.

Friday, July 21, 2006

Status Update aka Thank You for the job offers

In my last post, I wrote

"Aaargh! someone give me a job in the USA (or even better, an admission into a good PhD program) please! I give up on this country!"

A few readers (4 in all) were kind enough to write in with job offers in the USA! Nice! Even better, no one offered me an "enterprise" job. Two were in AI (related) companies and two in compilers. One of those jobs is veeeeery tempting.

Must-resist-temptation :-)

Thank you all. I've written to all of you individually.

That reminds me. Here is a long overdue status update.

I will be working on the "compiler project" (this needs a good name) till about the middle of November.

Then it looks like I will be working with KD building some stuff for his startup. KD and I think very differently so expect some fireworks. He will probably throw me out of the company the very first day :-)

My decision to leave India in 2007 is unchanged. I plan to try for a PhD rather than a job. That way I don't have to play the "H1 game" and sell myself into wage slavery immediately :-). Having said that, one of the job offers had a "higher education allowance" perk.

Hmmmmmm.

Update: The "blogspot ban" is partially removed. The "dangerous" sites are still blocked. Most of them are terrible and not worth reading (Of course I read them all. The best way to get people to do something is to forbid them to do so!). The only (somewhat) interesting nugget was the speech from the dock by Nathuram Godse (Gandhi's assassin). If the Intelligence Bureau thinks these sites are going to "incite violence" they need their heads examined.

Monday, July 17, 2006

Indians can't see this blog entry

because some f##$$$%%^ ing bureaucrat in the Indian Government has mandated that ISPs block access to blogspot.

If I wanted to live under a dictatorial government that condones this crap, I'd go and live in Iran. Or North Korea.

The bureaucrats responsible (and the weaselly ISP bosses who go along) should be lined up against the nearest wall and shot! I don't pay taxes to have my freedoms taken away by you stupid bastards.

Blogger.com is still accessible so I can post stuff using the management screens [roll eyes] but I can't read any blogs on blogspot through my browser.

What's next? Book Burning?

Aaargh! someone give me a job in the USA (or even better, an admission into a good PhD program) please! I give up on this country!

I am fed up with the arbitrariness of life in India. For 10 years I refused to go or work abroad because I wanted to live and work in India. But I'll be damned if I support a system that actively pits the nation's people against themselves (with caste based reservation - read discrimination - poised to entrench itself in the private industry) and does crazy things in the name of "national security" (like block blogspot). On one side we have the ruling Indian National Congress plus their traitorous Communist Allies and on the other we have religious fanatics led by the Bharatiya Janata Party, who are in the opposition.

I'd rather live as a janitor in a country where citizenship means something than in a slowly decaying "world's largest democracy". Any "patriotism" or "national security" that is fragile enough to be destroyed by some s.o.b nutcase putting up an "anti Indian" blog on blogspot (I offer a bet at ten to one odds that this will be the reason trotted out. Alternatively someone in the government is trying to shake down Google) is not worth preserving anyway.

I used to be proud to be Indian. Now I am ashamed.

If I can't get into a decent PhD program this year I'm going to be one of the "cheap brown ill paid programmer doing crap work and desperate for a green card" types in the USA. Or get on a boat and f#%^ing ROW to the West. Anything to get out, so help me God.

Update: The Indian Government has blocked blogspot because "some terrorists are using blogs to communicate". I'm speechless.

Update: How to bypass the ban

Saturday, July 15, 2006

Algorithmics In The Dark Compiler Alley

My upcoming "write a compiler" project is about writing an industrial strength compiler. The good news is that it is wonderful to be in a position where you get paid to do something like this (vs say, writing yet another J2EE/dotNet/framework du jour [something that starts with "R"? :-P] "enterprise app"). The bad news is that I have to deliver a 2 person year project in about 6 person months (1 person - Yours Truly - and about 6 months starting in August).

One way to "shrink" such a project is to use powerful languages. A compiler, while quite complex, has very few library dependencies. What this means is that you can write a compiler in any language you want and not sacrifice any advantages while doing so. The converse of this is that one can abandon brain dead languages like say, Java, and use something truly powerful, like say, Haskell.

I've spent the last few weeks writing 'compiler like' bits and pieces in various languages, and have shortlisted 3 - Lisp, Scheme, and Haskell. Besides their power, these languages have another feature in common - none of them have an open source, batteries included IDE. (And "no IDE" implies "use emacs". And the sheer customizability of emacs is exhilarating but more on that in another post).

An interesting (and unexpected) development is that evaluating alternative designs calls for a degree of algorithmic sophistication. For example, in the code generation phase of the compiler the decision to use an RTL (gcc's intermediate language) clone vs (say) a BURS (bottom up rewriting system) based graph traversal needs the ability to (mathematically) analyse the competing code gen algorithms and the trade offs of each. The key to getting a grip on BURS, for example, is to understand Dynamic Programming. More interestingly, there is often a need to augment an existing algorithm or data structure to accommodate application specific constraints. The paradigm embodied by the language under consideration determines the "shape" of the runtime. The demands of the runtime and the level of optimization required determine the appropriateness of a particular code gen algorithm, which in turn influences the transformations required on the abstract syntax tree, and so on.
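To make the Dynamic Programming connection concrete, here is a minimal sketch of cost-based instruction selection over an expression tree. Everything in it is an assumption made for illustration: the `Node` type, the rule names and the costs in `RULES` are invented, and the costs are computed while walking the tree (closer to what a tool like iburg does) rather than precomputed into tables as a real BURS generator would.

```python
from dataclasses import dataclass
from functools import lru_cache


@dataclass(frozen=True)
class Node:
    op: str            # "ADD", "MUL", "REG" or "CONST" (hypothetical IR)
    kids: tuple = ()   # child Nodes


# (rule name, tree pattern, cost). The string "reg" inside a pattern is a
# wildcard meaning "any subtree, already reduced to a register".
# Names and costs are made up for this sketch.
RULES = [
    ("use_reg", ("REG",), 0),
    ("mov_imm", ("CONST",), 1),
    ("add", ("ADD", "reg", "reg"), 1),
    ("add_imm", ("ADD", "reg", ("CONST",)), 1),
    ("mul", ("MUL", "reg", "reg"), 3),
    ("madd", ("ADD", ("MUL", "reg", "reg"), "reg"), 2),
]


def match(pattern, node):
    """Return the subtrees bound to "reg" wildcards, or None if no match."""
    if pattern == "reg":
        return [node]
    op, *subs = pattern
    if node.op != op or len(subs) != len(node.kids):
        return None
    leaves = []
    for sub, kid in zip(subs, node.kids):
        found = match(sub, kid)
        if found is None:
            return None
        leaves.extend(found)
    return leaves


@lru_cache(maxsize=None)
def best(node):
    """Cheapest cover of `node`: (total cost, rules in emission order)."""
    candidates = []
    for name, pattern, cost in RULES:
        leaves = match(pattern, node)
        if leaves is None:
            continue
        total, chosen = cost, []
        for leaf in leaves:
            # Optimal substructure: reuse the best cover of each subtree.
            sub_cost, sub_rules = best(leaf)
            total += sub_cost
            chosen.extend(sub_rules)
        chosen.append(name)
        candidates.append((total, tuple(chosen)))
    if not candidates:
        raise ValueError(f"no rule covers {node.op}")
    return min(candidates)


# (a * b) + 4
tree = Node("ADD", (Node("MUL", (Node("REG"), Node("REG"))), Node("CONST")))
```

With these invented costs, `best(tree)` prefers the fused `madd` rule (total cost 3) over `mul` followed by `add_imm` (total cost 4). The memoised `best` is the dynamic programming part: each subtree is solved once and its result is reused by every rule that could sit above it.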

There aren't too many books which teach you to design algorithms and data structures (vs memorizing a few standard ones). Of course if you are an "enterprise" programmer, you don't need any of this and can get by with simple lists and hashtables provided by your language's libraries. But if you (intend to) work in more challenging (and, if I may say so, interesting) domains, learn to analyze and design algorithms (and data structures). It might even help you get an interesting and satisfying job. Companies like Google and Amazon have very algorithmics heavy interviews. As Cormen et al say in Introduction To Algorithms (the best book ever written on Algorithms and Data Structures),

"Having a solid base of algorithmic knowledge and technique is one characteristic that separates the truly skilled programmers from the novices. With modern computing technology, you can accomplish some tasks without knowing much about algorithms, but with a good background in algorithms, you can do much, much more".

Another interesting, though less comprehensive and more lightweight textbook is The Algorithm Design Manual by Steven Skiena. It is more of a complement to Cormen and Rivest than a substitute and has a distinctive writing style. Every chapter has an accompanying "war story", drawn from the author's extensive consulting experience, where he reports how he applied the technique taught in the chapter to solve a real world problem or optimize (sometimes in a very dramatic fashion) an inefficient solution. Imagine - algorithmics consulting - I guess they won't be outsourcing those jobs anytime soon :-D.

The one "problem" with the Cormen and Rivest book is that it calls for a certain level of mathematical sophistication on the part of the student. This is unavoidable in a book that avoids a "cookbook of algorithms" approach and tries to teach how to design new algorithms and adapt existing ones to the problem space. So, before you buy this book (and it IS a book that should be on every programmer's bookshelf) be sure to learn basic discrete mathematics, probability, linear algebra and set theory. Working through Concrete Mathematics really helps. The effort will repay itself a thousand fold.

But be warned. Once you taste the power of algorithms you'll never be able to go back to your "build (or even worse, maintain) yet another Visual Blub dotNet banking app in Bangalore" day job. On the other hand, you may find that programming is challenging, meaningful, and above all, fun. Red Pill or Blue? :-)

Sunday, July 09, 2006

My blog disappeared!

A few hours ago my blog began to turn up with an empty page! No content visible (but the posts are still visible from the admin interface). Hopefully the folks at blogger will fix this soon.

This is a test post to see if creating a new post will recover the blog! (or at least update the atom/rss feeds)

It has reappeared! But I have lost all the minor tweaks like my blogroll. Aaaaaargh!!!! Oh well, I am too busy now to do any template hacking. Fwiw, the mailing list reveals that many people are having this problem. Google/Blogspot seems to lose your template once every so many updates (Java threads running amok methinks. Or some el crappo database like mysql screwing up?)

The best defence is to maintain a copy of your template somewhere (and your posts too if you value them enough. I don't :-) ).

Tuesday, June 27, 2006

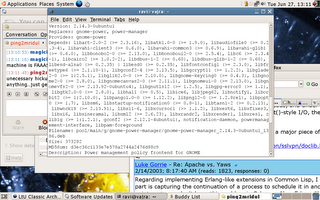

Farewell to Windows

I just switched the Thinkpad over to Ubuntu Linux. I am generally not prone to waxing lyrical about Linux distros, but Ubuntu is really awesome: it installs flawlessly on the Thinkpad in about 20 minutes flat (and that includes the Windows partition resize step).

I had forgotten what it was to mind meld with your computer in the last few years when I used Windows + Cygwin almost exclusively on my laptop.

I am home again :-)

Thursday, June 22, 2006

SW Methodology - Shaolin Style

One advantage of blogging is that it enables you to articulate your vague inchoate thoughts more coherently and, more importantly, it enables you to develop your thinking. After ranting about the inappropriate use of TDD, I've been able to develop my insights further. Expressing ideas helps to form them, as Paul Graham says.

The most fruitful way to think of any pre defined software methodology seems to be that it is an encoding of certain practices intended to teach you certain lessons.

In Karate (or any martial art) there are sequences of fixed movements called kata. In music, there are fixed forms called "etudes" (in western music. A rough analogue would be the varna in (South) Indian classical music). Conceptualising a methodology as an "encoding" of what worked for certain people in certain situations is an alternative to "methodology as religion".

The practitioner of a kata (or etude) seeks to perfect each component of the larger structure (a punch in a kata, a particular phrase in an etude) and since absolute perfection is elusive, the practice may go on for years or a life time.

If one thinks of the 12 practices of Extreme Programming (say) as forming a "fixed form" that teaches certain principles of software development (vs as some kind of sanctified system of "rules to follow or you'll burn in hellfire") then it becomes easy both to strive for perfection of each component (say unit tests) while practising, and to modify or discard the same component during performance as need demands.

Combat is different from kata practice in a dojo. When you are in a dark alley facing someone who tries to gut you with a knife, the important thing is to win that fight, not conform to the structure of the kata. When you are practising the kata, the idea is not to be creative and do "free flow" but to focus on mastering the form exactly as expressed in the kata. The immediate goal of kata is "perfection of the form" (and the lessons learned therefrom) not "effectiveness in attaining the ultimate purpose".

Guitar Etudes have odd fingerings and chord sequences which will never make it into a lead solo. But when you practice the etude you do what the notation says, not what might best express your musical intent or satisfy an audience. While practising, a guitar maestro will be able to perform an etude perfectly as written in the notation, awkward fingerings and all, but when he is on stage, improvising a solo, he really doesn't think of "exactness of fingering". He focusses on musical effect and allows his fingers to go where they will.

Once you accept that a set of rules telling you how to develop software is just a "fixed form", it is easy to discern the two errors that software methodology practitioners (not to mention the "evangelists" who have found the "One True Path") consistently fall into.

The first error is to apply the criteria of practising the form to the performance, in other words, to insist that you should fight only using the movements of the kata you learned. "Ohhh you are not writing a test, you are not Agile... Code without tests is 'legacy code'. Shame on you".

The second, and more subtle, error is to apply the criteria of performance to the practice of the form, i.e. you treat the kata as a combat situation. "Aargh writing tests for javascript is impossible so I won't write any tests because obviously this unit test stuff is impractical" or "Writing tests means I write more code so I will be slower. So I will never write any tests".

Of late there have been some attempts to use the underlying principles of "kata" to improve software development skills. This is the "practise the form perfectly in a non combat situation" approach.

What has been missing is the more important second half -- "combat is not kata". In a hypothetical combat situation, in that dark alley with someone lunging at you with a knife, it isn't very important that you take up the perfect "horse stance" and then do a perfect roundhouse kick. If you end up in hospital, leaking blood from half a dozen stab wounds, it doesn't matter if you "took the stance perfectly". As Bruce Lee once said "On the street, no one knows you are a black belt".

In the alley, facing a knife thrust, using a garbage can lid to block the knife and knock the attacker out cold will work fine, never mind that in Shotokan Karate there are no "Garbage Can Lid Kata". Of course if you've practiced your forms (and other practices like kumite) well for many years, you won't be sweatily flailing around, panting for air and driven by adrenaline, like an untrained person will. You will be in control, using the minimal amount of movement and force, staying balanced all the time, and aware of things like distance, force, balance, vector of attacks and so on.

You can even learn something from the combat situation and incorporate it into your practice ("hmm fighting in the dark in a cluttered alley is quite different from sparring in well lighted uncluttered dojos so how do I learn to fight better in such conditions?"). Who knows, you may be able to distill your experiences to create a "dark room kata" for others to learn from. And the practitioners of this "dark room kata" will in turn diverge from its fixed patterns in their own combat situations. And that is exactly as it should be.

One problem with software development is that "practice" and "performance" are mixed up thoroughly. Both generally happen within the context of a project someone else is paying for. A software developer learns "on the job". It is often impossible to change this state of affairs, but it is helpful to distinguish the "practice" and "performance" aspects, the kata and combat, the etude and the concert.

When you are learning extreme programming, do exactly what Kent Beck teaches in the "white book". When you are actually developing software it doesn't matter if you aren't "exact". If you have difficulties in applying a particular practice, make note and use what works. Later you can think of whether the problem is one of insufficient practice of the technique (in which case you practise more) or whether the technique itself is a misfit for your circumstances (in which case you modify the technique or discard it entirely). There is no intrinsic merit in force fitting the combat situation into the framework of your "school" of fighting. You'll just get stabbed.

There is no "perfect kata" which will reward rigid adherence with universally victorious combat ability. There is no "perfect methodology" which will guarantee success if it is followed "perfectly". Extreme Programming (to use one particular methodology) is not magic. It is just the encoding of what Kent Beck (and others) learned by trying to get better in the projects they worked on. By all means learn from Kent. Don't worship him (or XP). Ignore the high priced consultants. Your situation and requirements are likely different from what Kent faced. Nobody cares what methodology you follow as long as you write code that delivers value. Arguments about "Shotokan Karate is better than Shaolin Kung Fu" are on the same level as "XP is better than RUP (or waterfall for that matter)".

Combat is not practice. A dojo is not an alley. And vice versa.

Tuesday, June 20, 2006

The 'Cult Of the MBA'

In Joel Spolsky's article, there is one sentence that I wish I had written:

The cult of the MBA likes to believe that you can run organizations that do things that you don't understand.

On second thought though, the ideas behind that sentence look a little bit more complex than they appear. I have seen managers (used interchangeably with "folks who have MBAs" for the rest of this post) who have made a (positive) difference. Maybe it is just that competent people, with or without an MBA, do make a difference in situations including them. So the question becomes "Is there a significant differential advantage conferred by an MBA?" Somehow I doubt it. (I am talking of differentials of capability, not differentials of social standing or ability to climb organizational ladders.) I have seen too many clueless morons with MBAs screw up situations beyond repair.

Taking another vantage point, for a company that is essentially about doing cutting edge, innovative things (with or without software) and redefining the way the world works, it would be insane to hand the driving wheel over to a (non engineer) MBA. Which is probably why Larry and Sergey agonized over selecting their CEO for so long, and why Jobs is better for Apple than Sculley. OTOH if a company is involved in "software services" or "offshoring" or whatever, maybe it makes sense to hand over the reins to an MBA who can then 'manage "resources"', "scale up operations" etc.

And anyway "cults" are not confined to MBAs. On the geek side of the fence we have the Cult of Apple, The Cult of Agile etc. Nothing new here folks, move right along.

I do NOT use Yahoo 360

so please don't "add me as a friend" in Y360 :-)

I get 2 or 3 mails every day saying that "Mr/Ms X has requested that you add them as a friend". The thought is appreciated. Seriously. But there is NO point in adding you guys to an account I do not use.

I use practically nothing from Yahoo except a webfacing email id and Yahoo messenger and the latter is for historical reasons, not because I am impressed with its quality. Those of you who know me enough to be "added as friends" please use either my gmail id or ping me on yahoo messenger or skype or... :-) .

I hope I didn't come across as arrogant but I really do NOT like yahoo's flashy ad riddled pages or its Y360 service. Please understand. Thanks in advance.

:-)

Monday, June 19, 2006

Compilers, TDD, Mastery

I was talking to a friend yesterday about the upcoming compiler project when he asked me a question "Will you be coding the compiler in an Agile fashion? I mean using TDD etc?". This turns out to be an intriguing question.

I got sick of the "silver bullet" style evangelization of the Agile Religion and formally gave up "agile" some time ago. However I do believe in programmer written unit tests being run frequently and that is part of "agile". What I do not believe in is the notion of the design "emerging" from the depths of the code like Venus rising from the waters by just repeating the "write test, write code, refactor" cycle, a practice otherwise known as TDD.

Anyway, I decided to google for compilers and TDD and came up with some absolute gems. Here's the first.

On the comp.lang.extreme-programming mailing list, I came across this absolutely hilarious exchange.

In the midst of a tedious discussion on whether XP scales to large projects, "mayan" asked,

I am not asking what kinds of companies are doing XP work - I believe a lot of them are, and successfully. What I am asking is anyone using XP for large size/high complexity/high reliability problems. To be more specific - stuff like optimizing compilers, OS kernels, fly-by-wire systems, data-base kernels etc

Ron Jeffries, one of the "gurus" of agile, replied,

I'm not aware of any teams doing compilers or operating systems using XP, but having done both, I'm quite sure that they could be done with XP, and the ones I did (at least) would have benefited from it, even though they were successful both technically and in the market.

Aha, this looked interesting! Someone actually thinks a compiler can be written in an XP (and presumably TDD) fashion. Mayan issued a challenge to Ron which looked like this:

Excellent: lets talk about the following problem: write an optimizing back-end for a C compiler (assume we purchased the front-end from someone). How would we use XP, or adapt XP to it?

Some problems with compiler back-ends:

- its fairly clear what has to be done (core {intermediate-language/instruction selection/register-allocation/code-generation} + set of optimizations); its fairly clear what order they have to be done in (first the core, then a particular sequence of optimizations)

- you don't really need customer input. Having a customer around doesn't help.

- you can't demo very much till you have a substantial portion of the code written - the core (say about 30-60klocs) - no small (initial) release

- you had better get your IL (intermediate language) data-structures right - if you pick ASTs or 3 or 4 address form, you will do fine for the basic "dragon-book" optimizations, but later on you will either run into severe problems doing more advanced optimizations, or you will have to rewrite your entire code base [probably about 100-150klocs at this time]. Is this BUFD?

- you had better think of performance up front. Toy programs are easy; but a compiler has to be able to handle huge 100kloc+ single functions. Many heuristics are N^2 or N^3. Both run-time efficiency and memory usage end up being concerns. You can't leave optimization till the end - you have to keep it in mind always. It also pretty much determines your choice of language and programming style.

- TDD may be applicable for some of the smaller optimizations; on the other hand, for doing something like register-allocation using graph coloring, or cache blocking - I wouldn't even be able to know where to begin.

- The basic granule (other than the core) is the optimization. An optimization can be small (constant propagation in a single basic block) or large (unroll-and-jam, interprocedural liveness analysis). The larger ones take multiple days to be written. Integrating them "often" is either pointless (you integrate the code, but disable the optimization) or suicidal (you integrate the code, but it breaks lots and lots of tests; see below). Best case: integrate once the optimization is done.

- Its not easy to split an optimization into subproblems; so typically one (programmer/pair) works on an optimization. For the larger ones, if it needs to be tweaked, or fixed, the unit that wrote it is the best unit to fix it. The overhead to grok a couple of thousand lines of code (or more!) vs. getting the original team to fix it is way too high.

- Testing, obviously, is a lot more involved. Not only do we have to check for correctness, we have to check for "goodness" of the produced code. Unfortunately, many optimizations are not universally beneficial - they improve some programs, but degrade others. So, unit testing cannot prove or disprove the goodness of the implementation of the optimization; it must be integrated with the rest of the compiler to measure this. Further, if it degrades performance, it may not be a problem with that optimization - it may have exposed something downstream from it.

- Typical compiler test suites involve millions of lines of tests. They tend to be run overnight, and over weekends on multiple machines. If you have a badly integrated optimization, you've lost a nights run. And passing all tests before integration is, of course, an impossibility. Even a simple "acceptance" set of tests will check barely a small percentage of the total function in the compiler.

Hmmm.....does this still look a lot like XP to you? I can see that at least 1/3rd of the XP practices being broken (or at least, severely bent).

Based on your experience, do you disagree with any of the constraints I outlined?

Mayan

I expected Ron to post a reply explaining how all the above can fit into the Agile/XP framework, but there was only [ the sound of crickets chirping].
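For a sense of scale, the "small" end of Mayan's spectrum (constant propagation in a single basic block) really is only a few lines, which is why a unit test for it is easy to imagine. The sketch below is an illustration under assumptions, not code from any compiler discussed here: the instruction format `(dest, op, a, b)`, the `mov` opcode and the function name `propagate_constants` are all invented for the example.

```python
import operator

# Operators this toy pass knows how to fold at compile time.
FOLD = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def propagate_constants(block):
    """Constant propagation and folding over one basic block.

    `block` is a list of (dest, op, a, b) tuples. Operands are ints
    (constants) or strings (variable names); "mov" ignores `b`.
    """
    known = {}  # variable name -> constant value known at this point
    out = []
    for dest, op, a, b in block:
        # Replace operands whose value is already known.
        a = known.get(a, a)
        b = known.get(b, b)
        if op == "mov" and isinstance(a, int):
            known[dest] = a
            out.append((dest, "mov", a, None))
        elif op in FOLD and isinstance(a, int) and isinstance(b, int):
            value = FOLD[op](a, b)
            known[dest] = value
            out.append((dest, "mov", value, None))
        else:
            # dest is overwritten with something not known at compile time.
            known.pop(dest, None)
            out.append((dest, op, a, b))
    return out


block = [
    ("x", "mov", 4, None),
    ("y", "*", "x", 2),      # folds to y = 8
    ("z", "+", "y", "n"),    # n is unknown, but y is replaced by 8
    ("x", "mov", "n", None), # x stops being a known constant
    ("w", "+", "x", 1),      # must stay as written
]
optimized = propagate_constants(block)
```

Checking `optimized` against the expected instruction list is a perfectly reasonable unit test. Nothing comparable falls out so easily for graph colouring register allocation or unroll-and-jam, where "correct" and "good" are separate questions, which is the point of the message above.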

On the XPChallengeCompilers page, Ron repeats his claim that "I've written commercial-quality compilers, operating systems, database management systems ". Hmm. Yeah. Whatever. He doesn't make any useful points about how one would actually go about doing something like this.

On the same page, Ralph Johnson has (as one would expect) a more thoughtful and articulate view point about how XP would apply to writing a (simple) compiler, but he focusses more on the fact that "DoTheSimplestThingThatCouldPossiblyWork is true for compilers, as well. My problem is that people seem to think that the simplest thing is obvious, where my experience is that it is often not obvious. One of the things that makes someone an expert is that they KNOW the simplest thing that will really work."

Now this makes sense. There is no hint of the design "emerging" from "average" developers grinding through the TDD cycle and there is a strong hint that you do have to understand the domain of a compiler well before you can even conceive of the "simplest thing". He concludes with "The XP practices will work fine for writing an E++ compiler. However, I think there will need to be some other practices, such as comparing code to specification, as well as appreciating the fact that you sometimes must be an expert to know what simple things will work and which won't". Ahh, the blessed voice of rationality.

Another area where Agile falls flat on its face is when dealing with concurrency. See the XP Challenge concurrency page for the flailing attempts of an "agilist" to "design by test" a simple problem in concurrency. Tom Cargill proposed a simple synchronization problem and Don Wells (and some of the agile "gurus") attempted to write a test exposing the bug and didn't succeed till Tom pointed the bug out.

Coming back to compilers, there are projects like the PUGS project, led by the incredible Autrijus Tang, in which unit tests are given a very high priority. I couldn't find any references to the PUGS design "evolving" out of the TDD cycle however. It seems as if they build up a suite of programmer written tests and run it frequently. I can see how that practice would be valuable in any project. Accumulating tests and an "automated build" are the practices I retained from my "agile" years. AFAIK the pugs folks don't do "TDD", expecting the design to emerge automagically.

This whole idea of "emergent design" (through TDD grinding) smacks of laziness, ignorance and incompetence. Maybe if you are doing a web->db->web "enterprise" project you can hope for the TDD "emergence" to give you a good design, (well, it will give you a design :-P) but I would be very surprised if it worked for complex software like compilers, operating systems, transaction monitors etc. Maybe we should persuade Linus to "TDD" the Linux kernel. TDD-ShmeeDeeDee! Bah! :P

Update: Via Jason Yip's post, Richard Feynman's brilliant speech exposes what exactly is wrong with the practice of "Agile" today. An excerpt

I think the educational and psychological studies I mentioned are examples of what I would like to call cargo cult science. In the South Seas there is a cargo cult of people. During the war they saw airplanes with lots of good materials, and they want the same thing to happen now. So they've arranged to make things like runways, to put fires along the sides of the runways, to make a wooden hut for a man to sit in, with two wooden pieces on his head like headphones and bars of bamboo sticking out like antennas--he's the controller--and they wait for the airplanes to land. They're doing everything right. The form is perfect. It looks exactly the way it looked before. But it doesn't work. No airplanes land. So I call these things cargo cult science, because they follow all the apparent precepts and forms of scientific investigation, but they're missing something essential, because the planes don't land.

Saturday, June 17, 2006

[DevNote] Interpreter To Compiler

I generally use a wiki (moinmoin in case anyone is interested) on my laptop to keep notes on various projects I work on. However the wiki has not been transferred from my old laptop to my new one. With all the travelling I am doing, this won't get done till the next month or so. Meanwhile I'll use this blog to record (some of) my dev notes (when I can get net access ...sigh.....). Since Blogger doesn't support tagging or categories, I'll prepend a "[DevNote]" string to the title of the post so readers can ignore the post if they wish. These notes are likely to be cryptic and incoherent and most readers should just skip them.

To transform an interpreter to a compiler,

- Rewrite interpreter in CPS form.

- Identify Compile time and Runtime actions (CPAs and RTAs).

- Abstract RTAs into separate 'action procedures'. Use thunks to wrap any weird control structures where necessary.

- Abstract Continuation Building procedures (CBPs)

- Convert RTAs and CBPs into records. Now the eval on the parse tree spits out a datastructure.

- Write an evaluator for the resulting data structure making sure to (a) implement register spilling (b) 'flatten' code to generate "assembly like" code.

- TODO : investigate the pros and cons of Bottom Up Rewriting Systems (BURS) vs the scheme above vs generating a (gcc) RTL like intermediate language

- TODO : investigate the effects on stack discipline and garbage collection when the various schemes are adopted. Which is more convenient?
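The first few steps can be sketched on a toy language. What follows is only an illustration under heavy assumptions, not the scheme from my notes in full: the expression format (an int, or a tuple of operator and two operands) and the names `eval_cps`, `compile_expr`, `Push`, `Apply` and `run` are all made up for this example, and the target is a simple stack machine, so register spilling is not shown. The point is that the compiler walks the tree exactly as the CPS interpreter does, except that each run time action becomes a record and the continuation becomes the tuple of records still to be executed.

```python
from dataclasses import dataclass

# Toy expression language (hypothetical): an int literal,
# or a tuple (op, left, right) with op in {"+", "-", "*"}.

def apply_op(op, a, b):
    """The run time action for a binary operator."""
    if op == "+":
        return a + b
    if op == "-":
        return a - b
    if op == "*":
        return a * b
    raise ValueError(f"unknown operator: {op}")

def eval_cps(expr, k):
    """CPS interpreter: every result is handed to the continuation k."""
    if isinstance(expr, int):
        return k(expr)
    op, left, right = expr
    return eval_cps(
        left,
        lambda a: eval_cps(right, lambda b: k(apply_op(op, a, b))),
    )

# Run time actions turned into records.
@dataclass(frozen=True)
class Push:
    value: int

@dataclass(frozen=True)
class Apply:
    op: str

def compile_expr(expr, rest=()):
    """Same traversal as eval_cps, but the continuation is data:
    `rest` is the tuple of instructions to run afterwards."""
    if isinstance(expr, int):
        return (Push(expr),) + rest
    op, left, right = expr
    return compile_expr(left, compile_expr(right, (Apply(op),) + rest))

def run(code):
    """Evaluator for the flat, assembly-like instruction sequence."""
    stack = []
    for instr in code:
        if isinstance(instr, Push):
            stack.append(instr.value)
        else:
            b = stack.pop()
            a = stack.pop()
            stack.append(apply_op(instr.op, a, b))
    return stack.pop()

expr = ("+", 1, ("*", 2, 3))
interpreted = eval_cps(expr, lambda v: v)
code = compile_expr(expr)
compiled = run(code)
```

Here `interpreted` and `compiled` should agree, and `code` is already "flattened": the tree walk (the compile time action) happens once in `compile_expr`, and `run` never sees the parse tree.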

Thursday, June 01, 2006

StockHive goes live

I am still internet challenged and will be offline for the next month or so, but this is worth commenting on.

My friend Yogi has launched StockHive, an online tool for folks investing in India's stock markets.

If you are an investor, you should definitely look at StockHive. For the techies amongst you the site is built on Rails.

Thursday, May 11, 2006

Blog Lockdown

I will be travelling for the next couple of months and will have zero network access. The commenting facility on this blog has been disabled. I will re-enable it when I get back to civilization in mid July.

Au Revoir

Wednesday, May 10, 2006

The Decline and Fall Of India

The present government has proposed an utterly idiotic caste-based reservation in higher education and in private industry. In other words, get ready to fill in a religion/caste form to determine if you'll get an IIT admission, get a job, get promoted etc.

Atanu says it much better than I can. Read. Learn.

Well, I vote with my feet. I am getting out of this stupid country next year. [tongue attached to cheek mode on] I wonder if China issues citizenships to foreigners. Of course I'd be arrested in 10 days for "anti communist beliefs" but it would be fun while it lasted. Might be worth considering if China ever became a democracy. [tongue attached to cheek mode off]

Monday, May 08, 2006

Abandoning Agile

My friend Yogi said in a chat session recently, "I havent seen your blog entry renouncing all things agile!"

Yogi, your wish is my command. Here it is.

Bill Caputo said it much better than I ever could. So I'll borrow from his blog entry.

"...In short,... I am formally retiring from Agile Evangelism (something I lost the heart for some time ago), and simply becoming an Agile Private-Citizen. Thus, I expect that the tone of my writing will shift somewhat as I no longer care (and truthfully haven't for some time) whether the world adopts XP -- or even whether anyone does -- I am simply interested in building software to the best of my ability, working with others of like mind, and flat-out outperforming others using the techniques and tools that I have learned how to use over the past several years. That anyone familiar with XP would immediately recognize its strong impact on the nature of our software development project is almost (but not quite) irrelevant to that goal. ..."

Of course the difference between Bill's position and mine is that he still continues to use XP. He just doesn't evangelize it. The kind of problems on which I'll be working for the rest of the year (and hopefully, for the rest of my life) shift the context of development so much that most of the practices and assumptions of XP/Agile just don't make sense anymore. The only surviving practices are the constantly growing test suite (no more test first) run periodically and a very attenuated Continuous Integration step. Where Bill is an Agile Private Citizen, I am an Agile Ex-Citizen.

I have also stepped out of the Agile Software Community Of India, a non profit society of which I am a founding member. I may still need to attend one final meeting to elect the next year's office holders, and help resolve some residual organizational issues but that's it. I am done with all things Agile.

It is a great relief. Agile is "hot" these days and all sorts of weirdos crawl out of the woodwork to scramble on the "Agile" platform and caper and scream to attract "agile consulting" assignments. The politics is sickening. Now I can just shut these folks out and focus on what is more important, writing good software.

Sunday, May 07, 2006

Grounding "exotic" technology - The Banker's story

The machine learning system I was called in to design for a Telecom Fraud detection company is moving into production. I was told yesterday that the testing folks are "amazed at the high accuracy of the new codebase" and my client is extremely satisfied with the improvements. I was really happy to hear this. Nothing makes a programmer happier than news that his code/design is making a substantial contribution in the domain for which it was written.

My clients were leaders in their domain even before we added the new ML capability. With the enhancements (not limited to the fraud detection engines, they've added a lot of functionality in other areas) they should wipe the floor with the competition. None of their competitors has a fraud classifier system as powerful. And we have implemented only a small fraction of what could be done.

This datapoint just confirms my belief that powerful technology allied to a strong management vision can lead to massive value addition. What made the difference is the adoption of a key metric (erroneous classifications per million cell phone calls) as the measure of the software's quality and the almost total freedom given to the implementation team (which included some of the best folks in the company). We had NO political or turf battles, and the laser-like focus of the manager in charge on the key metric helped us to evaluate alternate strategies and select the most appropriate ones. This (tying the software developed to key business metrics) is one way of "grounding" business software and preventing thrashing. (More explanation of this concept coming up in future blogs).

Back to Banking. One of the nuggets that came out of our conversation (see last blog) was the fact that most Indian banks still do manual verification of signatures on cheques. This was automated to a large degree a long time ago in most banks in the West. The algorithms for this are available in the literature. Large chunks of software that made up the original solution are publicly available. All it needs is for someone to put together a hardware/software combination to provide this functionality and the banks would jump at it.

Why is this important? Here is the industry context. In 2009, the Indian banking sector will be opened to foreign banks. (It is semi-open right now). That would mean (assuming India is doing well economically) that a tidal wave of foreign banks would arrive and the existing banks would face real competition.

The scariest part of this impending shift in the landscape, from the point of view of Indian Banks is the massive technological advantage that western banks enjoy (relative to the Indian banks). And in the Indian banking scenario, market share is almost directly in proportion to the technology level of each bank.

Here is an example. For years, only ICICI offered an "at par cheque" facility, i.e., the ability to present a cheque drawn on an account in another city and get it cashed instantaneously (vs. waiting for a few days for the cheque to be couriered to the city in which the account resides). Thousands of businessmen did not have an alternative but to bank with ICICI because no one else had anything similar.

By the time the other banks caught up with this technology, ICICI came up with a feature by which transfers between accounts could be transacted on the net (even if the target account was in another bank). As of today no one else has this feature (unless they go through ICICI) and this gives them an edge.

I forget the domain term for this. But the point is that the ranking in the technical arms race almost directly determines the marketshare. And this marketshare is what is under threat when the big boys land with their deep pockets and massive mainframes. So even something as simple as automated cheque signature verification could provide an edge in the impending blood bath in the banking sector.

So there. Now you have an idea on how to make millions. If you do, cut me a cheque for the idea. :-)

A Thought Experiment

I recently had a conversation with a friend who works in ICICI, one of the largest banks in India. Contrary to my "image" I am deeply interested in Business and the "enterprise"; I believe the practice of outsourcing the software at the heart of an enterprise to body shops flogging mediocre programmers is indefensible. My friend was kind enough to enlighten me on how banking really works and the context in which Indian Banking operates. The next few blog entries will be devoted to insights which spun off from that conversation. First though, I'd like to focus on the gaps between image, ideal and reality.

As with all industries (e.g. the outsourced software "industry") there is an image ("intellectually challenging work done by extremely bright people trying to change the world" in the case of the Indian software industry) which people outside the industry believe and a not-so-cool reality which only insiders are aware of. The reality of India's software "industry" is that most "hackers" working in it are comparable to the illegal immigrants from Mexico who come to swanky Wall Street offices early in the morning to vacuum and dust. The difference is that the average Mexican immigrant who does these jobs does not fool himself into thinking he is doing "cutting edge" work.

The interesting thing is that beyond these two perceptions ("What does IndustryX look like from the outside?" and "What is IndustryX really like (from the inside)?") there is another question "What could IndustryX be like if we tried to really deliver superior customer value and create first class working environments and did first class work?".

Very few companies are trying to envision and implement an answer to the last question in any industry, whether it be software, banking or anything else.

Let us do a thought experiment and envision a future in which India did less of the "janitorial" programming and became a true "software super power". At such an imaginary point in the multiverse,

- India would be home to innovative companies like (say) Apple and Google.

- At least one popular language (like, say, Ruby), Operating System (like Linux), or widely used tool (like, say, emacs) would be invented by an Indian.

- People all over the world would expect a newly announced fantastic advance in software to have an Indian (vs an American) origin. At least a few "iconic" thought leaders (of the calibre of Linus Torvalds or Steve Jobs) would be Indian.

- Many Indians would be publishing the "killer books" in various subfields of software theory and practice. Take the best technical book you have read. Now imagine it having an Indian author to see what I mean.

- India would have annual events like the DARPA Robotics Car Race, with relentless pushing forward of the frontiers of knowledge and possibility every year.

- The most talented and ambitious students from other countries would apply in droves to Indian Educational Institutions and the selection committee would have the luxury to pick and choose the best.

- Harvard and MIT would learn from IIMs and IIT and try to imitate their methods and "best practices".

- Bangalore would be the California of the world, with the most innovative people, companies, and ideas finding their natural home there.

- People all over the world would want to immigrate to India and acquire Indian citizenship. Our consulates in the USA and UK would be forced to impose quotas on work and other immigrant visas.

- The core of a new software system would be designed and implemented in Bangalore and the "janitorial" work would be "outsourced" elsewhere. (Yes, I see you ROTFL :-) I told you this is all imagination. A man can dream can't he? :-))

Tuesday, April 18, 2006